Is fine-tuning essential for reflection in LLMs?

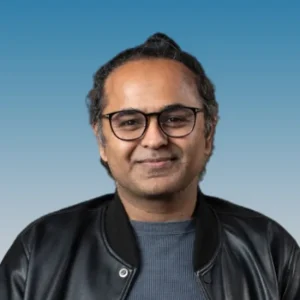

Turns out, it might not be. New research from Essential AI, co-founded by Ashish Vaswani and Niki Parmar, challenges a major assumption in the world of language models: that self-correction requires reinforcement learning or complex fine-tuning.

So what did they do differently?

In their study, “Rethinking Reflection in Pre-Training,” the team tested pre-training checkpoints of OLMo-2 (a 7B-parameter open model from the Allen Institute for AI, pre-trained on 4 trillion tokens) on math and logic problems seeded with deliberately flawed reasoning. There were no special rewards and no extra fine-tuning. And yet, from pre-training alone, the model could self-correct: given a simple cue like “Wait,” it paused, re-evaluated the flawed reasoning, and fixed its answer.
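To make the setup concrete, here is a minimal sketch of how one flawed-reasoning example with a “Wait,” cue might be assembled into a prompt. The `AdversarialExample` class, the `build_prompt` helper, the prompt layout, and the toy egg problem are all hypothetical illustrations. They are not Essential AI's actual data format.

```python
from dataclasses import dataclass


@dataclass
class AdversarialExample:
    """Hypothetical container for one test item (not the paper's schema)."""
    question: str
    flawed_reasoning: str  # chain of thought with a deliberately planted error
    correct_answer: str


def build_prompt(ex: AdversarialExample, trigger: str = "Wait,") -> str:
    """Join the question and flawed reasoning, then append the reflection cue.

    The model under test continues from the trigger. A reflective model
    should notice the planted error instead of repeating the wrong answer.
    """
    return f"Question: {ex.question}\nAnswer: {ex.flawed_reasoning} {trigger}"


# Toy example: the reasoning deliberately miscomputes 12 * 3 as 32.
example = AdversarialExample(
    question="A box holds 12 eggs. How many eggs are in 3 boxes?",
    flawed_reasoning="Each box holds 12 eggs, so 3 boxes hold 12 * 3 = 32 eggs.",
    correct_answer="36",
)

prompt = build_prompt(example)
print(prompt)
```

The point of appending the cue after the error, rather than before it, is that the model must actively disagree with text already in its context, which is what distinguishes self-correction from simply solving the problem fresh.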

What benchmarks was it tested on?

OLMo-2 was tested on six reasoning benchmarks, where it showed it could correct mistakes within a task. Even more striking, its reflective ability improved with scale: bigger models, and models trained for longer, reflected better, all during standard pre-training.
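One simple way to quantify “reflecting better” is to score how often a model's continuation recovers the correct answer after the planted error, then compare that rate across checkpoints. The sketch below assumes a last-number-in-the-output matching rule and uses hypothetical names (`recovered`, `correction_rate`, `checkpoint_a`, `checkpoint_b`); the continuations are made-up placeholder strings, not real model outputs or results from the study.

```python
import re


def recovered(continuation: str, correct_answer: str) -> bool:
    """True if the last number in the continuation equals the correct answer.

    Assumed scoring rule for illustration; real evaluations may parse
    answers differently.
    """
    numbers = re.findall(r"-?\d+(?:\.\d+)?", continuation)
    return bool(numbers) and numbers[-1] == correct_answer


def correction_rate(continuations: list[str], answers: list[str]) -> float:
    """Fraction of adversarial items where the model fixed the planted error."""
    if not continuations:
        return 0.0
    hits = sum(recovered(c, a) for c, a in zip(continuations, answers))
    return hits / len(continuations)


# Placeholder continuations for two hypothetical checkpoints on the same items.
answers = ["36", "15"]
outputs = {
    "checkpoint_a": ["so the total is 32.", "that gives 14."],
    "checkpoint_b": ["12 * 3 is actually 36.", "recounting, the answer is 15."],
}

for name, conts in outputs.items():
    print(name, correction_rate(conts, answers))
```

Plotting this rate against model size or training tokens is one way to check whether self-correction trends upward with scale.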

Why does this matter?

This finding opens new questions for AI alignment and reasoning research. If reflection can be learned from data patterns alone, without structured reinforcement, it suggests that some reasoning behaviors usually credited to post-training may already be emerging during pre-training.

Where is Essential AI heading with this?

Backed by Google, Thrive Capital, and AMD, Essential AI is quietly building full-stack tools that go beyond chatbot interfaces. Their goal? To automate repetitive tasks across the enterprise while enabling models to think more like humans, with the ability to pause, revise, and reason.

How does this compare to other players?

While groups like Anthropic, Google DeepMind, and Meta AI push alignment through safety tuning, interpretability research, and reinforcement learning from feedback, Essential AI is showing that the pre-training phase alone may hold untapped potential for emergent reasoning, provided the right cues are present in the data and prompts.

What does this mean for developers and researchers?

It could streamline the training pipeline: less reliance on post-hoc fine-tuning, more focus on curating high-quality pre-training data. For enterprises, this might mean faster deployment of smarter agents that need less babysitting.

Bottom line?

Self-reflection might be a natural byproduct of scale and pre-training data, not a post-processing step.

Essential AI is nudging us toward a future where smarter models don’t just respond; they reconsider, mid-sentence.

Source: Essential AI